Introduction to etcd and Its Role in Kubernetes

Welcome to our exploration of the heart of Kubernetes: etcd. etcd is an integral component of any Kubernetes setup, so understanding how the two work together is vital for managing and operating a Kubernetes environment effectively. In this first part of our series, we look at the close relationship between Kubernetes and etcd.

Understanding etcd

etcd is not your typical database. It is a distributed, reliable key-value store developed by CoreOS, designed explicitly for shared configuration and service discovery. It's simple yet powerful - providing APIs for setting, getting, and watching keys.
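To make the set/get/watch idea concrete, here is a minimal in-memory sketch in Python. It is not etcd's client API: the class `ToyKV`, its method names, and the prefix-based watch are assumptions chosen for illustration only.

```python
from collections import defaultdict


class ToyKV:
    """In-memory stand-in for a key-value store with put/get/watch (not etcd itself)."""

    def __init__(self):
        self._data = {}
        self._watchers = defaultdict(list)  # prefix -> list of callbacks
        self.revision = 0  # bumped on every write

    def put(self, key, value):
        self._data[key] = value
        self.revision += 1
        # Notify every watcher whose prefix matches the changed key.
        for prefix, callbacks in self._watchers.items():
            if key.startswith(prefix):
                for callback in callbacks:
                    callback(key, value)
        return self.revision

    def get(self, key):
        return self._data.get(key)

    def watch(self, prefix, callback):
        self._watchers[prefix].append(callback)


kv = ToyKV()
seen = []
kv.watch("/config/", lambda key, value: seen.append((key, value)))
kv.put("/config/replicas", "3")
kv.put("/other/key", "not under the watched prefix")
```

After these calls, `seen` holds only the change under `/config/`, which is the essence of a watch: clients are told about changes instead of polling for them.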

The Power of etcd: Consistency, Fault Tolerance, and Raft

The true power of etcd lies in its ability to provide strong consistency and fault tolerance. This is achieved thanks to its use of the Raft consensus algorithm, a popular method used in distributed systems to reach agreement among distributed processes.

Raft works by electing a leader among the etcd instances in a cluster. The leader handles all client requests that change the state of the system (e.g., put, delete). It appends an entry to its log and broadcasts it to the follower nodes, which append the entry to their own logs. Once a majority of the cluster has stored the entry, the leader treats it as committed and applies it, so every etcd instance agrees on the state of the system and the cluster stays consistent.
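The replication step can be sketched as a toy simulation in Python. This is a deliberately simplified model, not Raft as etcd implements it: it leaves out terms, heartbeats, log matching, and retries, and the names `Node` and `replicate` are hypothetical.

```python
class Node:
    def __init__(self, name, reachable=True):
        self.name = name
        self.reachable = reachable
        self.log = []

    def append(self, entry):
        """Follower side: store the entry only if this node can be reached."""
        if not self.reachable:
            return False
        self.log.append(entry)
        return True


def replicate(leader, followers, entry):
    """Leader appends locally, broadcasts, and reports whether a majority holds the entry."""
    leader.log.append(entry)
    acks = 1  # the leader counts its own copy
    for follower in followers:
        if follower.append(entry):
            acks += 1
    cluster_size = len(followers) + 1
    return acks > cluster_size // 2


leader = Node("etcd-0")
followers = [Node("etcd-1"), Node("etcd-2", reachable=False)]
committed = replicate(leader, followers, ("put", "/foo", "bar"))
```

With one of three members unreachable, two copies of the entry still exist, which is a majority, so `committed` is `True` in this run.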

This ability to provide a consistently replicated log of transactions makes etcd robust and resilient, able to cope with failures such as network partitions or hardware malfunctions. When a leader fails, the remaining followers hold a new election and select a new leader. As long as a majority of the members can still reach each other, the cluster remains available and continues to operate.
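The majority requirement translates into simple arithmetic. The short Python sketch below (the function names are ours, chosen for illustration) shows how many members must agree and how many failures a cluster of a given size can absorb.

```python
def quorum(members):
    """Smallest number of members that forms a majority."""
    return members // 2 + 1


def tolerated_failures(members):
    """Members that can fail while a majority is still available."""
    return members - quorum(members)


for n in (1, 3, 4, 5):
    print(f"members={n} quorum={quorum(n)} tolerated_failures={tolerated_failures(n)}")
```

Note that a four-member cluster tolerates no more failures than a three-member one, which is why odd cluster sizes are the usual choice.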

For those interested in a more comprehensive understanding, we highly recommend reviewing the original Raft paper, aptly titled "In Search of an Understandable Consensus Algorithm". It's written by Diego Ongaro and John Ousterhout from Stanford University and offers an excellent breakdown of the algorithm's mechanics, use cases, and benefits.

etcd in a Kubernetes Cluster

Within a Kubernetes cluster, etcd is the primary data store and the single source of truth for the cluster. It stores both the cluster's configuration data and its current state.

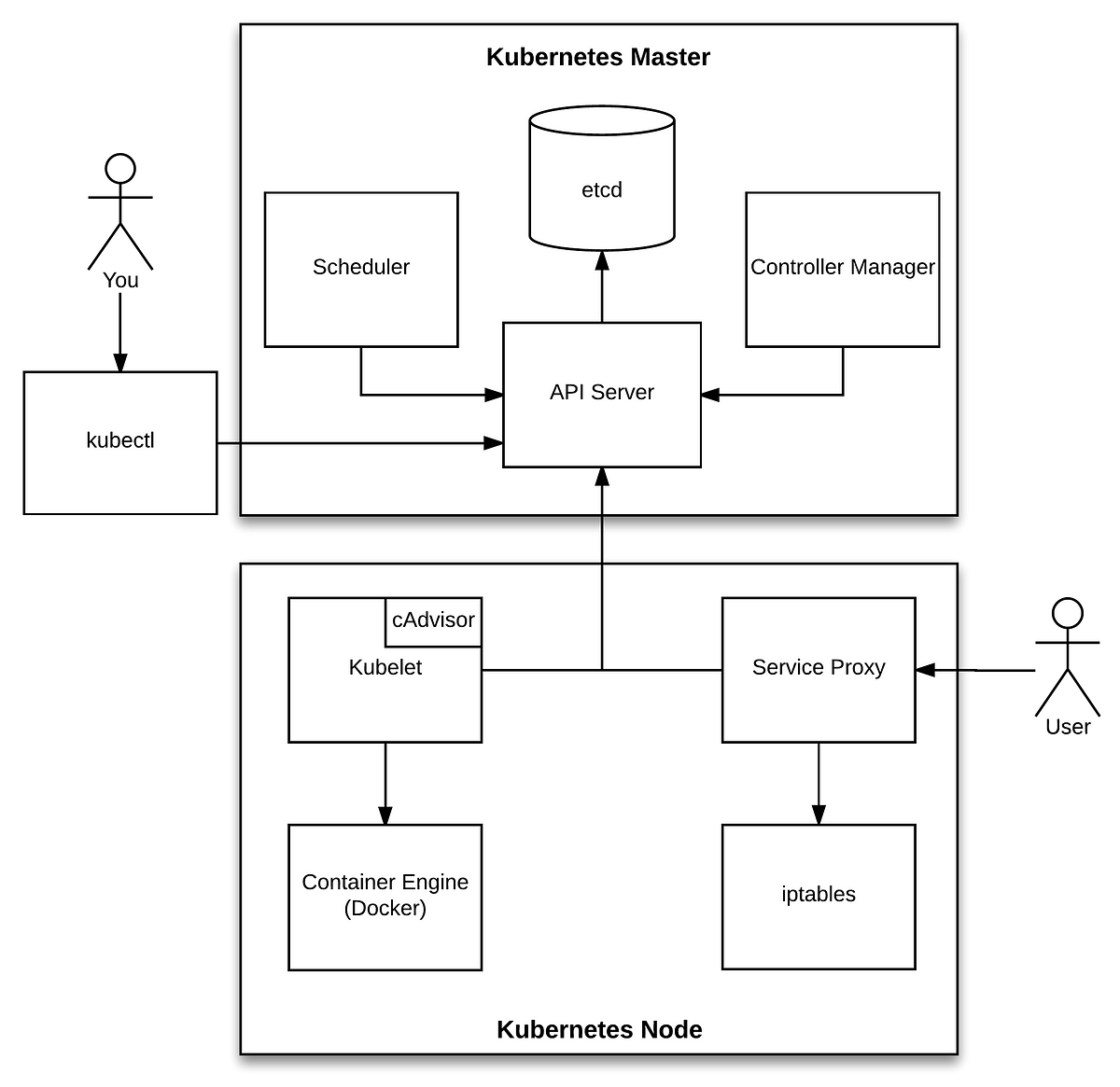

In a Kubernetes setup, most components don't interact directly with etcd. Instead, their interactions are mediated by the Kubernetes API server. The API server provides a robust, unified API that hides the inner workings of etcd from other components. This abstraction ensures consistent, coordinated interactions with etcd.

Take, for example, the process of scheduling a pod. When the Kubernetes Scheduler needs to allocate a node to an unscheduled pod, it doesn't query etcd directly. Instead, it communicates with the API server, which fetches the necessary data from etcd. Using this information, the Scheduler then decides the appropriate node for the pod.

Furthermore, both the Scheduler and the various controllers maintain a watch on the API server for changes to their respective resources. This allows these components to react almost in real time to changes in the cluster state.
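The mediation and watch pattern described above can be sketched in a few lines of Python. Everything here is a toy model under stated assumptions: `Store` stands in for etcd, `ApiServer` and its methods `create_pod`, `bind_pod`, and `watch_pods` are hypothetical names, and the "scheduler" simply picks the first node rather than filtering and scoring as the real one does.

```python
class Store:
    """Stand-in for etcd: a plain dict keyed by pod name."""

    def __init__(self):
        self.pods = {}


class ApiServer:
    """The only class that touches the store; other components call its methods."""

    def __init__(self, store):
        self._store = store
        self._watchers = []

    def watch_pods(self, callback):
        self._watchers.append(callback)

    def create_pod(self, name):
        self._write(name, {"name": name, "nodeName": None})

    def bind_pod(self, name, node):
        self._write(name, dict(self._store.pods[name], nodeName=node))

    def _write(self, name, pod):
        self._store.pods[name] = pod
        # Every write is pushed to watchers so they can react right away.
        for callback in list(self._watchers):
            callback(pod)


class Scheduler:
    def __init__(self, api, nodes):
        self._api = api
        self._nodes = nodes
        api.watch_pods(self.on_pod_event)

    def on_pod_event(self, pod):
        # React only to pods that have no node yet; the choice is trivial here.
        if pod["nodeName"] is None:
            self._api.bind_pod(pod["name"], self._nodes[0])


store = Store()
api = ApiServer(store)
scheduler = Scheduler(api, nodes=["minikube"])
api.create_pod("keepr")
```

The scheduler never reads or writes `store` itself. It learns about the new pod through the watch and records its decision through the API server, which is the same division of responsibilities the text describes.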

In essence, etcd serves as the bedrock upon which the state of a Kubernetes cluster rests. It is the storehouse where all critical data resides. Meanwhile, the Kubernetes API server acts as a gatekeeper, mediating interactions and ensuring that they are synchronized, consistent, and secure, thereby protecting the integrity of etcd.

Exploring Kubernetes etcd Data with etcdkeeper

Visually exploring the data etcd holds is a good way to deepen your understanding of its role within Kubernetes. In this demonstration, we use etcdkeeper, an accessible web-based GUI client for etcd, to navigate our cluster's data in a Minikube environment.

Before we can dive into the data exploration, we need to deploy etcdkeeper to our Kubernetes cluster. For this, we have prepared a pod definition that deploys etcdkeeper to Minikube. Here is the Kubernetes command to deploy etcdkeeper:

kubectl apply -f etcdkeeper-pod.yaml

Please make sure to replace etcdkeeper-pod.yaml with the path to your pod definition file. The pod definition used in this demonstration is as follows:

apiVersion: v1
kind: Pod
metadata:
  name: keepr
  labels:
    app: keepr
spec:
  nodeSelector:
    kubernetes.io/hostname: minikube
  containers:
    - name: keepr
      image: evildecay/etcdkeeper:latest
      command: ["/opt/etcdkeeper/etcdkeeper.bin"]
      args: ["-h", "0.0.0.0", "-p", "8080", "-usetls", "-cacert", "/certs/etcd/ca.crt", "-cert", "/certs/apiserver-etcd-client.crt", "-key", "/certs/apiserver-etcd-client.key"]
      volumeMounts:
        - name: certs
          mountPath: /certs
          readOnly: true
  volumes:
    - name: certs
      hostPath:
        path: /var/lib/minikube/certs
        type: Directory

Once you've deployed etcdkeeper to your Kubernetes cluster, you'll need to connect it to your etcd data store. For that, we can retrieve the etcd client URL from the etcd configuration on our Kubernetes node with the following command:

minikube ssh

sudo cat /etc/kubernetes/manifests/etcd.yaml | grep https | cut -d "/" -f 3

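This command returns the etcd client address, typically in the format 192.168.49.2:2379. Because the manifest can contain several https URLs, the output may span more than one line; use the client address on port 2379. When you enter it in etcdkeeper, prepend "https://", since etcd in this cluster is configured to accept only TLS connections.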
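If you prefer to extract the endpoint programmatically, the following Python sketch pulls every https address on port 2379 out of the manifest text. The function name `client_endpoints` and the `SAMPLE` lines are illustrative assumptions, not output captured from a real cluster; only the 192.168.49.2:2379 address comes from the example above.

```python
import re


def client_endpoints(manifest_text):
    """Return unique https endpoints on port 2379, in order of first appearance."""
    hosts = re.findall(r"https://([0-9A-Za-z.\-]+):2379(?!\d)", manifest_text)
    endpoints = []
    for host in hosts:
        url = f"https://{host}:2379"
        if url not in endpoints:
            endpoints.append(url)
    return endpoints


# Illustrative excerpt only; a real etcd.yaml contains more fields.
SAMPLE = """\
    - --advertise-client-urls=https://192.168.49.2:2379
    - --listen-client-urls=https://127.0.0.1:2379,https://192.168.49.2:2379
    - --listen-peer-urls=https://192.168.49.2:2380
"""

endpoints = client_endpoints(SAMPLE)
# Skip the loopback address, which is not reachable from another pod.
external = [e for e in endpoints if "127.0.0.1" not in e]
```

Here `external` ends up with the single value to paste into etcdkeeper. The peer URL on port 2380 is ignored because it serves member-to-member traffic rather than clients.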

Now, with etcdkeeper successfully connected to the etcd data store, you're free to explore the key-value pairs that reflect the current state of your cluster. For instance, inspecting the /registry/pods/ directory will show all the pods running in your cluster, each represented by a unique key. Delving into these keys, you'll uncover comprehensive information about each pod, stored in a structured data format.

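To illustrate, let's look at the key /registry/pods/default/keepr. Pod keys include the namespace, and we assume here that the pod was created in the default namespace.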

This provides detailed information about the etcdkeeper pod we deployed, showcasing the richness of the data etcd holds.
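The key layout can be captured in a small Python helper. This sketch assumes the default /registry prefix and the /registry/<resource>/<namespace>/<name> layout used for namespaced core resources such as pods; cluster-scoped resources and resources in other API groups use different shapes, and the names `RegistryKey`, `pod_key`, and `parse_registry_key` are hypothetical.

```python
from typing import NamedTuple


class RegistryKey(NamedTuple):
    resource: str
    namespace: str
    name: str


def pod_key(namespace, name):
    """Build the key under which a pod would be stored (assumed layout)."""
    return f"/registry/pods/{namespace}/{name}"


def parse_registry_key(key):
    """Split a namespaced key into its resource, namespace, and name parts."""
    parts = key.strip("/").split("/")
    if len(parts) != 4 or parts[0] != "registry":
        raise ValueError(f"not a namespaced registry key: {key!r}")
    _, resource, namespace, name = parts
    return RegistryKey(resource, namespace, name)


parsed = parse_registry_key(pod_key("default", "keepr"))
```

Reading keys this way makes it easier to find your way around in etcdkeeper's tree view: the second path segment is the resource type, the third the namespace, and the last the object name.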

Conclusion

In this part of the series, we introduced etcd, highlighted its pivotal role in a Kubernetes cluster, and explored its data using etcdkeeper. In essence, etcd and the API server form the control plane's backbone, driving Kubernetes and ensuring smooth operation.

In the upcoming Part 2 of this series, we will dive deeper into the internals of etcd, discussing topics such as Protocol Buffers (protobuf) and Write-Ahead Log (WAL). Stay tuned for more fascinating insights into the world of Kubernetes and etcd.
